Generative AI is drawing attention across industries as a way to create new materials and personalized content. Yet integrating these models into production isn’t straightforward. This blog post walks through that journey on AWS, focusing on operationalizing generative AI through Large Language Model Operations (LLMOps) and on how LLMOps differs from traditional Machine Learning Operations (MLOps). It also touches on AIOps (Artificial Intelligence for IT Operations), the use of AI to improve IT operations, and on where AIOps vendors fit in this field.

What is Generative AI?

Generative AI models stand out for their ability to learn patterns from vast datasets and produce new, realistic outputs. These outputs range from images to text that reads like human writing. The true strength of generative AI is its capacity to innovate, automate, and personalize at a scale that was previously unattainable.

The Role of MLOps and AIOps

Machine Learning Operations (MLOps) combines machine learning, DevOps, and data engineering to automate the machine learning lifecycle, including data collection, model training, and deployment. These practices are the foundation for bringing generative AI and LLMOps (Large Language Model Operations) into scalable production environments, particularly on AWS. AIOps (Artificial Intelligence for IT Operations) is a related but distinct discipline: it applies AI tools to analyze data from IT operations tools and devices in order to automate and improve IT operations processes. Put simply, MLOps manages the lifecycle of models, while AIOps uses models to run IT operations more efficiently.

Challenges of Operationalizing Generative AI

Operationalizing generative AI models, even with mature MLOps practices in place, presents unique challenges:

- Data quality and bias: Generative AI models, including the large language models managed through LLMOps, are highly sensitive to the quality of their training data. Biased training data leads to biased outputs, so careful data curation and cleaning are needed to keep outputs fair.

- Model explainability: It is hard to trace how a generative model arrives at a given output. This makes debugging difficult and makes it harder to verify that a deployed model produces the desired outputs.

- Safety and security: Generative AI can be misused to create malicious content such as deepfakes or phishing emails. Robust safeguards are essential to prevent this.

- Computational cost: Training and running generative AI models is computationally expensive. Keeping these costs under control is key to scalable deployment in production.

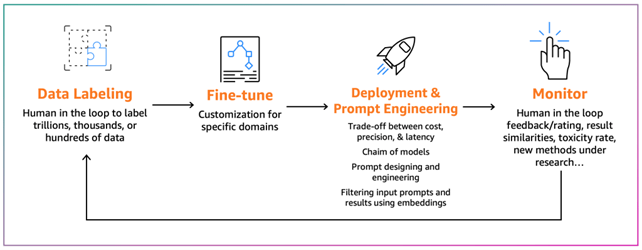

LLMOps Implementation: Adapting to MLOps and AIOps

Pre-trained large language models are powerful, but they can struggle with specialized tasks. Fine-tuning bridges this gap by adapting an existing model to specific needs. You provide the model with relevant examples for the task, such as financial reports paired with their summaries. The model learns from these examples and adjusts its weights to perform better in that domain. Like adding a specialized tool to a Swiss army knife, fine-tuning keeps the pre-trained model’s foundation while tailoring it to become an expert in your specific area.

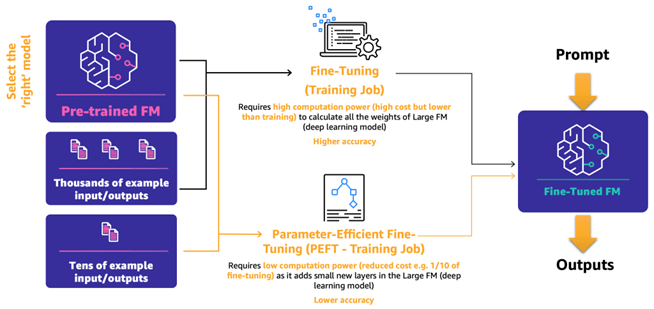
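To make the idea of "relevant examples" concrete, here is a minimal sketch of how report and summary pairs might be written as JSON Lines training data. The `prompt` and `completion` field names and the instruction text are assumptions for illustration; the exact schema depends on the model and service you fine-tune with, so check its documentation before using this layout.

```python
import json

# Hypothetical example records: each pairs a source report with the
# summary we would like the model to learn to produce.
examples = [
    {"report": "Q1 revenue rose 12% year over year, driven by cloud services.",
     "summary": "Revenue up 12% on cloud growth."},
    {"report": "Operating costs fell 3% after the data-center consolidation.",
     "summary": "Costs down 3% after consolidation."},
]

def to_jsonl(records, instruction="Summarize the following financial report:"):
    """Return one JSON object per line with 'prompt' and 'completion' keys."""
    lines = []
    for rec in records:
        row = {
            "prompt": f"{instruction}\n\n{rec['report']}",
            "completion": rec["summary"],
        }
        lines.append(json.dumps(row, ensure_ascii=False))
    return "\n".join(lines) + "\n"

jsonl_text = to_jsonl(examples)
```

Each line of `jsonl_text` is one training example, which keeps the file easy to stream, validate, and split into training and validation sets.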

Choosing the right approach to fine-tune your deep learning model can significantly impact its performance and efficiency. Here’s a breakdown of two popular methods:

Traditional Fine-Tuning:

Method: This method retrains the entire model, adjusting the weights and biases of all layers using a new labeled dataset.

Advantages:

- High Accuracy: This approach can achieve the best possible accuracy when sufficient labeled data is available.

Disadvantages:

- Computationally Expensive: Training the entire model can be resource-intensive, requiring significant computational power and time.

- Data Requirements: Large amounts of labeled data are necessary for optimal performance.
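As a rough illustration of why retraining every weight is resource-intensive, the sketch below estimates the memory needed for model state alone. It assumes mixed-precision training with the Adam optimizer, a common setup that this post does not specify: about 16 bytes per parameter (2 for half-precision weights, 2 for gradients, and 12 for the full-precision weight copy plus two optimizer moments). Activations and framework overhead are not included, so real usage is higher.

```python
def full_finetune_memory_gb(num_params, bytes_per_param=16):
    """Estimate memory (decimal GB) for weights, gradients, and optimizer state.

    bytes_per_param=16 is an assumption: mixed-precision training with Adam.
    Activation memory is deliberately left out of this estimate.
    """
    return num_params * bytes_per_param / 1e9

# A hypothetical 7-billion-parameter model.
estimate_gb = full_finetune_memory_gb(7_000_000_000)  # 112.0
```

Even before activations are counted, this exceeds the memory of a single typical accelerator, which is why full fine-tuning of large models is usually spread across several devices.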

Parameter-Efficient Fine-Tuning (PEFT):

Method: Instead of retraining the entire network, this approach keeps the pre-trained weights frozen and adds a small number of new trainable layers. These new layers learn task-specific information, adapting the model to the new data.

Advantages:

- Faster Training: PEFT requires less computational power and training time compared to traditional fine-tuning.

- Lower Data Requirements: PEFT can achieve good results with smaller datasets.

Disadvantages:

- Potentially Lower Accuracy: While efficient, PEFT might not achieve the same level of accuracy as traditional fine-tuning, especially with complex tasks.

The following diagram illustrates these mechanisms.

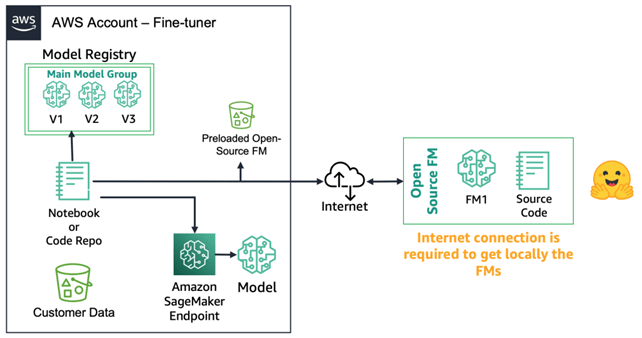
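The difference in training cost comes down to how many parameters are updated. The sketch below compares the two methods for a single weight matrix, using low-rank adapters (LoRA) as one example of a PEFT technique. The post does not say which PEFT technique it has in mind, so LoRA, the layer size, and the rank are assumptions chosen for illustration.

```python
def full_params(d_out, d_in):
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable parameters for a rank-r adapter: B (d_out x r) and A (r x d_in)."""
    return rank * (d_out + d_in)

# Hypothetical layer size and adapter rank.
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)           # 16777216
adapter = lora_params(d_out, d_in, rank)  # 65536
fraction = adapter / full                 # 0.00390625, about 0.4%
```

With these numbers the adapter trains well under 1% of the parameters in that layer, which is the source of the faster training and lower data requirements listed above.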

Deploying Fine-Tuned Open-Source Models

Deploying fine-tuned foundation models takes different paths for open-source and proprietary options. Open-source models offer greater flexibility and control. Fine-tuners can download the model from platforms like Hugging Face Model Hub, customize it deeply, and deploy it on platforms like an Amazon SageMaker endpoint. Downloading directly from the hub requires an internet connection.

To support more secure environments (such as for customers in the financial sector), you can download the model on premises, run all the necessary security checks, and upload it to a local bucket in your AWS account. Fine-tuners then use the foundation model (FM) from the local bucket without an internet connection. This supports data privacy, because neither the model nor the data travels over the internet during fine-tuning.

The following diagram illustrates this method.

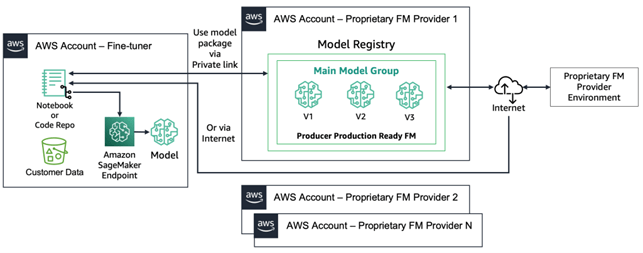
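One of the security checks in this flow could be an integrity check on the downloaded files before they are uploaded to the bucket. The following is a minimal sketch under that assumption: `verify_artifacts` and the `expected` mapping of file names to SHA-256 digests are hypothetical helpers, and the trusted digests would have to come from a source you control. A real review would typically add malware scanning and license checks as well.

```python
import hashlib
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large weight files do not need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifacts(model_dir, expected):
    """Return the names of files that are missing or whose hash does not match.

    expected maps a file name (relative to model_dir) to its trusted
    SHA-256 hex digest. An empty result means every file checked out.
    """
    failures = []
    for name, want in expected.items():
        path = Path(model_dir) / name
        if not path.is_file() or sha256_of(path) != want:
            failures.append(name)
    return failures
```

Only when `verify_artifacts` returns an empty list would the model directory be uploaded to the bucket that fine-tuners read from.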

Fine-Tuned Proprietary Models

Fine-tuning pre-trained models unlocks immense potential, but concerns arise when dealing with proprietary models and sensitive customer data. Here’s a breakdown of the challenges and how Amazon Bedrock offers a secure solution:

Challenges with Proprietary Models:

- Limited Access: Fine-tuners lack direct access to the model’s inner workings, hindering customization and raising concerns about data privacy.

- Remote Data Usage: Fine-tuning often necessitates transferring customer data to the proprietary model provider’s accounts, raising security and compliance questions.

- Multi-Tenancy Issues: Sharing a model registry among multiple customers creates potential data leakage risks, especially when serving personalized models.

Amazon Bedrock to the Rescue:

- Secure Enclave: Amazon Bedrock addresses these concerns by fine-tuning a private copy of the base model in a secure environment. Customer data stays within AWS and is not shared with the model provider, so the customer retains privacy and control.

- Data Isolation: Each fine-tuner operates within their own isolated environment, eliminating multi-tenancy risks and ensuring data remains segregated.
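As a hedged sketch of what starting such a job might look like, the helper below assembles the arguments for the `create_model_customization_job` operation of the boto3 `bedrock` client. The helper name, role ARN, bucket names, model identifier, and hyperparameter values are all placeholders, and hyperparameter keys differ by base model, so treat this as an outline to check against the current Bedrock documentation and not as a reference implementation.

```python
def build_customization_request(job_name, model_name, role_arn, base_model_id,
                                training_s3_uri, output_s3_uri, hyper_parameters,
                                subnet_ids=None, security_group_ids=None):
    """Assemble keyword arguments for a Bedrock fine-tuning job (hypothetical helper)."""
    for uri in (training_s3_uri, output_s3_uri):
        if not uri.startswith("s3://"):
            raise ValueError(f"expected an s3:// URI, got {uri!r}")
    request = {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # The API expects hyperparameter values as strings.
        "hyperParameters": {k: str(v) for k, v in hyper_parameters.items()},
    }
    # Optional: run the job inside your own VPC for network isolation.
    if subnet_ids and security_group_ids:
        request["vpcConfig"] = {
            "subnetIds": list(subnet_ids),
            "securityGroupIds": list(security_group_ids),
        }
    return request

# All values below are placeholders for illustration.
request = build_customization_request(
    job_name="report-summarizer-job",
    model_name="report-summarizer",
    role_arn="arn:aws:iam::123456789012:role/ExampleBedrockRole",
    base_model_id="amazon.titan-text-express-v1",
    training_s3_uri="s3://example-bucket/train.jsonl",
    output_s3_uri="s3://example-bucket/output/",
    hyper_parameters={"epochCount": 2},
)

# With AWS credentials configured, the job would be submitted with:
#   import boto3
#   boto3.client("bedrock").create_model_customization_job(**request)
```

Keeping request construction separate from the API call makes the configuration easy to review and test without touching an AWS account.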

Conclusion

Operationalizing generative AI models with LLMOps (Large Language Model Operations) presents unique challenges and opportunities for industries aiming to leverage AI’s power. Understanding the nuances of generative AI, MLOps, and fine-tuning techniques is crucial for organizations to successfully integrate these models into their production environments, ensuring data privacy, security, and accuracy.

With the right approach to fine-tuning and deployment, businesses can unlock the full potential of generative AI models and drive innovation across various industries. Harnessing the capabilities of platforms like Amazon SageMaker and Amazon Bedrock within an AWS-centric framework, organizations can navigate the complexities of operationalizing generative AI with confidence and efficiency.

As AI continues to evolve, the operationalization of generative AI models will play a pivotal role in shaping the future of industries worldwide. Embracing these technologies and adopting best practices for implementation, including lessons from the AIOps field, will be key to staying competitive in an increasingly AI-driven landscape.