Introduction

Artificial intelligence (AI) is more accessible than ever, yet managing AI workloads efficiently remains a challenge for many businesses and developers. Our web application addresses this problem: it runs as a microservice within an Amazon Elastic Kubernetes Service (EKS) cluster equipped with accelerator nodes for AI workloads. The application simplifies AI deployment and lets users choose their preferred large language model (LLM) provider from several sources, including SageMaker and Bedrock.

Streamlining AI Workflows with Streamlit

Streamlit, an open-source Python library known for its simplicity, is the backbone of our web application. The application runs as a microservice within an EKS cluster with accelerator nodes, a setup intended to process AI workloads quickly and efficiently so that your projects get results sooner.

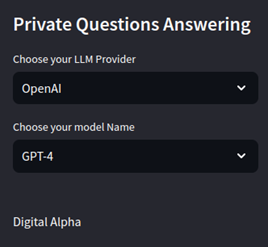

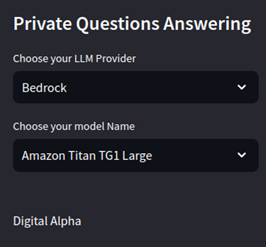

Choosing Your LLM Provider

One of the key features of our application is the ability to choose your LLM provider. Depending on your use case and requirements, you can select from the following sources:

OpenAI:

Tap into the capabilities of GPT-3 and its successors, which suit a wide range of natural language processing tasks.

SageMaker Endpoint:

Amazon SageMaker provides an ecosystem for building, training, and deploying machine learning models. A model deployed to a SageMaker endpoint can serve as the application's LLM, which gives you versatility and scalability.

Bedrock:

Amazon Bedrock is a fully managed service that provides access to foundation models from multiple providers through a single API, so you can pick the model that fits your needs without managing the underlying infrastructure.

Local Hosting:

If you have your own custom-trained models, local hosting integration lets you plug them into our application alongside the managed providers.
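
The provider choice described above can be pictured as a small lookup from the selected option to a handler. The following is only a minimal sketch, not the application's actual code: `ProviderSettings`, `PROVIDERS`, and `ask` are hypothetical names, and each handler is a stub that echoes its input where a real one would call the corresponding provider SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ProviderSettings:
    """Hypothetical per-provider settings; the field names are illustrative."""
    name: str
    target: str  # e.g. a model name, endpoint name, model ID, or local URL


# A handler takes the settings and a prompt and returns the model's reply.
Handler = Callable[[ProviderSettings, str], str]


def _stub(label: str) -> Handler:
    """Build a placeholder handler; a real one would call the provider's SDK."""
    def handler(settings: ProviderSettings, prompt: str) -> str:
        return f"[{label}:{settings.target}] {prompt}"
    return handler


# Registry keyed by the option the user picks in the UI (assumed labels).
PROVIDERS: Dict[str, Handler] = {
    "OpenAI": _stub("openai"),
    "SageMaker Endpoint": _stub("sagemaker"),
    "Bedrock": _stub("bedrock"),
    "Local Hosting": _stub("local"),
}


def ask(provider: str, target: str, prompt: str) -> str:
    """Route a prompt to the handler registered for the chosen provider."""
    if provider not in PROVIDERS:
        raise ValueError(
            f"Unknown provider {provider!r}; choose one of {sorted(PROVIDERS)}"
        )
    return PROVIDERS[provider](ProviderSettings(provider, target), prompt)
```

Keeping the providers behind one function signature means the rest of the application does not need to know which backend answered, and adding a provider is a one-line change to the registry.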

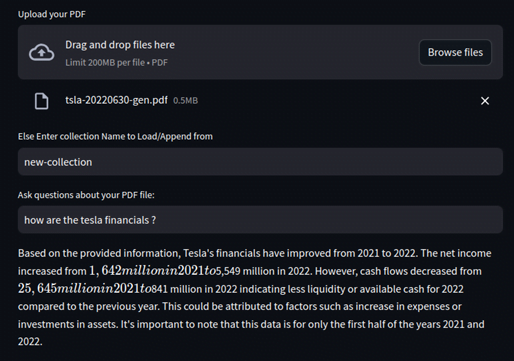

Harnessing Your Vector Database

Query your existing data or upload new documents for AI processing. To get started, provide the address of your vector database and specify the collection to use. This feature is particularly useful for organizations that manage large datasets and need efficient document retrieval and question answering.
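
As an illustration of the two inputs mentioned above, the sketch below validates an address and a collection name before any connection is attempted. It assumes the address is an HTTP(S) URL, which the article does not state; `VectorStoreSettings` and `parse_vector_store_settings` are hypothetical names, and the example host is made up.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class VectorStoreSettings:
    """Hypothetical container for the two values the user supplies."""
    address: str
    collection: str


def parse_vector_store_settings(address: str, collection: str) -> VectorStoreSettings:
    """Normalize and sanity-check user input before connecting."""
    address = address.strip()
    collection = collection.strip()

    parsed = urlparse(address)
    # Assumption: the database is reached over http or https.
    if parsed.scheme not in ("http", "https") or not parsed.hostname:
        raise ValueError("address must look like http(s)://host[:port]")
    if not collection:
        raise ValueError("collection name must not be empty")

    # Drop any trailing slash so later path joins stay predictable.
    return VectorStoreSettings(address=address.rstrip("/"), collection=collection)


settings = parse_vector_store_settings("http://vector-db.example.internal:6333/", "docs")
print(settings)
```

Rejecting malformed input at this point gives the user an immediate, readable error instead of a connection timeout later on.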

Seamless Question Answering

With your data source configured, question answering is straightforward. Whether you want to extract insights from existing documents or from freshly uploaded PDFs, enter your queries and the integrated AI models return answers, helping you make well-informed decisions quickly.
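
The article does not describe how answers are produced internally. A common pattern for this kind of document question answering is to retrieve the most relevant text chunks and pass them to the LLM as context. The sketch below shows that general idea only: `embed` is a toy word-count stand-in for a real embedding model, and every function name is hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def top_chunks(question: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the question, best first."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: List[str], k: int = 2) -> str:
    """Assemble the text that would be sent to the chosen LLM provider."""
    context = "\n".join(f"- {c}" for c in top_chunks(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


documents = [
    "Invoices are due within 30 days.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2% fee.",
]
print(build_prompt("When are invoices due?", documents))
```

In a real deployment the similarity search would run inside the vector database rather than in application code, and the prompt would be sent to whichever LLM provider the user selected.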

Conclusion

In AI, efficiency and flexibility matter most. Our web application, which runs as a microservice within an EKS cluster equipped with accelerator nodes, helps users integrate AI into their workflows. With the freedom to choose your preferred LLM provider, connect to your vector database, and perform in-depth question answering on documents, it is a versatile tool that caters to a wide range of AI needs.

Whether you are a business looking to improve customer support or a developer building new AI projects, our Streamlit-based web application offers the simplicity and power needed to put artificial intelligence to work. It takes much of the complexity out of managing AI workloads, so you can focus on the solutions you want to build. Try it today.