Introduction

In today’s era of digital transformation, effective data integration and management are essential for enterprises seeking to leverage the power of AI. This blog post explores how a fully open-architecture, open-source-powered solution can transform your internal knowledge bases through Retrieval-Augmented Generation (RAG). Our Knowledge-bases Integration Platform for Enterprise AI, available on AWS Marketplace, combines Airbyte for data integration with Qdrant for vector storage and search to streamline how your data is collected, indexed, and retrieved. Because it is built on open-source tools, the platform offers a flexible, plug-and-play experience that lets you choose the best tools for your specific needs, and it provides a robust framework for optimizing data integration and management in your organization.

Streamlining Data Integration

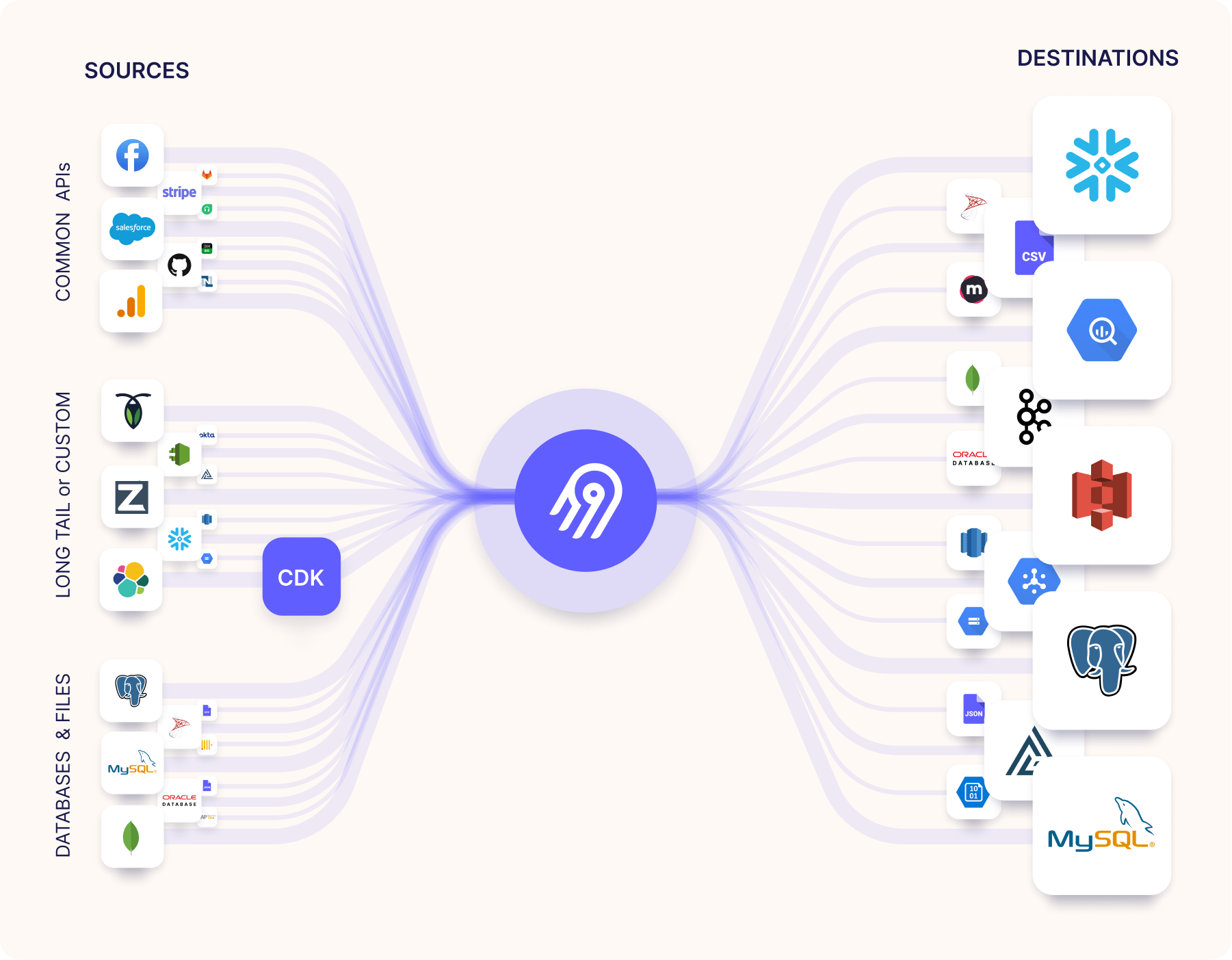

Because our offering is fully open-architecture and open-source powered, we chose Airbyte for data integration. Airbyte is an open-source data integration platform designed to consolidate data from disparate sources into a unified system. Its large library of connectors lets you sync data efficiently from databases, APIs, and cloud services.

Key Features:

- Extensive Connector Library: Supports a wide range of data sources and destinations.

- Custom Connector Development: Easy to create and integrate custom connectors.

- Open Source Flexibility: Community-driven with transparency and adaptability.

- Scalability: Handles large-scale data integration tasks with ease.
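Airbyte’s connectors take care of the mechanics, but the idea that makes repeated syncs efficient is simple enough to sketch. The following is a conceptual, pure-Python model of an incremental sync with deduplication, loosely in the spirit of Airbyte’s incremental sync modes: a cursor field records how far the previous run got, so each run copies only newer rows, and rows are upserted by primary key. It is not Airbyte’s API or configuration; the `Destination` class, the `incremental_sync` function, and the `id`/`updated_at` field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical record shape: every row has a primary key ("id") and a cursor
# field ("updated_at") that increases whenever the row changes.
Record = dict[str, Any]


@dataclass
class Destination:
    """Toy destination table keyed by primary key, so re-synced rows are deduplicated."""
    rows: dict[Any, Record] = field(default_factory=dict)

    def upsert(self, record: Record) -> None:
        # The newest version of a row replaces any older one with the same key.
        self.rows[record["id"]] = record


def incremental_sync(source: list[Record], dest: Destination, state: dict[str, Any]) -> dict[str, Any]:
    """Copy only rows newer than the saved cursor and return the advanced state."""
    cursor = state.get("cursor")  # None on the first run, which copies everything
    new_rows = [r for r in source if cursor is None or r["updated_at"] > cursor]
    for row in sorted(new_rows, key=lambda r: r["updated_at"]):
        dest.upsert(row)
    if new_rows:
        cursor = max(r["updated_at"] for r in new_rows)
    return {"cursor": cursor}


source = [
    {"id": 1, "title": "VPN setup", "updated_at": 10},
    {"id": 2, "title": "Expense policy", "updated_at": 12},
]
dest = Destination()
state = incremental_sync(source, dest, {})  # first run: copies both rows
source[0] = {"id": 1, "title": "VPN setup (v2)", "updated_at": 15}  # row edited at the source
state = incremental_sync(source, dest, state)  # second run: copies only the edited row
print(state, dest.rows[1]["title"], len(dest.rows))  # {'cursor': 15} VPN setup (v2) 2
```

Persisting the returned state between runs is what lets a scheduled sync resume instead of re-copying everything. The trade-off is that a purely cursor-based sync cannot see rows that were hard-deleted at the source, which is why full-refresh syncs and change data capture exist as alternatives.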

For a more detailed look at Airbyte and data integration, see our blog post: Effortless Data Integration: Any Source to Destination

The Power of Vector Databases

In machine learning and AI, vectors (often called embeddings) are numerical representations of complex data such as text or images. Because these vectors are high-dimensional, they require specialized indexing and search capabilities. In keeping with our open-architecture, open-source approach, we chose Qdrant as our vector database. Qdrant provides robust tools to store, manage, and query these vectors, making it an essential component for applications that rely on similarity search and real-time AI-driven insights. The image below shows the Qdrant ecosystem:

Key Features:

- High-Performance Vector Search:

- Efficient Similarity Search: Qdrant is optimized to perform similarity searches quickly, allowing for the rapid retrieval of vectors that are most similar to a given query vector. This is crucial for applications such as recommendation systems, where finding items similar to user preferences in real-time is essential.

- Approximate Nearest Neighbor (ANN) Search: By searching an ANN index (Qdrant builds an HNSW graph), Qdrant trades a small amount of search accuracy for much higher speed, keeping responses fast even on large datasets.

- Scalable Architecture:

- Horizontal Scalability: In distributed mode, Qdrant can scale out by adding nodes to the cluster and spreading a collection’s shards across them, so it can handle growing data volumes and query loads.

- Elastic Management: Dynamic scaling capabilities allow Qdrant to adapt to changing workloads, ensuring efficient resource utilization.

- Integrations:

- Machine Learning Frameworks: Qdrant works with vectors produced by popular machine learning frameworks such as TensorFlow, PyTorch, and Hugging Face Transformers. Embeddings generated by these models can be loaded directly into Qdrant, which makes it straightforward to connect an existing embedding pipeline.

- APIs and SDKs: Qdrant exposes REST and gRPC APIs and offers client SDKs for several programming languages, so developers can integrate it into their applications with little effort.

- Real-Time Updates:

- Dynamic Data Ingestion: Qdrant supports real-time data ingestion, allowing new data points to be added, updated, or deleted on the fly. This is particularly useful for applications that require up-to-date information, such as live recommendation systems.

- Consistent Performance: Maintains high query performance even as the underlying data continuously changes, ensuring reliable and accurate results.
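The features above boil down to a few core operations: store vectors with a payload, insert, update, or delete them at any time, and return the vectors most similar to a query. The sketch below models those operations in plain Python to make them concrete. `ToyVectorStore` and its methods are hypothetical names, not the Qdrant client API, and the search is an exact brute-force scan scored with cosine similarity (one of the distance metrics Qdrant supports); Qdrant itself answers queries from an approximate HNSW index so that it does not have to score every vector.

```python
import math
from typing import Any

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """(a . b) / (|a| |b|): 1.0 for the same direction, 0.0 for orthogonal vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory collection; NOT the Qdrant client API.

    search() scores every point (exact, O(n) per query). Qdrant instead
    queries an approximate HNSW index to stay fast on large collections.
    """

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.points: dict[int, tuple[Vector, dict[str, Any]]] = {}

    def upsert(self, point_id: int, vector: Vector, payload: dict[str, Any]) -> None:
        # Adds a new point or overwrites an existing one, effective immediately.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        self.points[point_id] = (vector, payload)

    def delete(self, point_id: int) -> None:
        # Removing a point takes it out of all subsequent search results.
        self.points.pop(point_id, None)

    def search(self, query: Vector, limit: int = 3) -> list[tuple[int, float, dict[str, Any]]]:
        # Score every stored vector, then keep the `limit` most similar ones.
        scored = [
            (pid, cosine_similarity(query, vec), payload)
            for pid, (vec, payload) in self.points.items()
        ]
        scored.sort(key=lambda hit: hit[1], reverse=True)
        return scored[:limit]


store = ToyVectorStore(dim=3)
store.upsert(1, [1.0, 0.0, 0.0], {"title": "Onboarding checklist"})
store.upsert(2, [0.9, 0.1, 0.0], {"title": "New-hire FAQ"})
store.upsert(3, [0.0, 0.0, 1.0], {"title": "Quarterly report"})
print([hit[0] for hit in store.search([1.0, 0.0, 0.0], limit=2)])  # [1, 2]
store.delete(1)
print([hit[0] for hit in store.search([1.0, 0.0, 0.0], limit=2)])  # [2, 3]
```

The linear scan is fine for a few thousand vectors, but its cost grows with every point added. That is exactly the problem ANN indexes such as HNSW address, by accepting a small loss in recall in exchange for much faster queries.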

Qdrant’s powerful vector database capabilities make it a critical tool for modern AI and machine learning applications. Its ability to perform high-performance similarity searches, scale dynamically, integrate with various ML frameworks, and handle real-time data updates ensures it can meet the demands of diverse and complex use cases. By leveraging Qdrant, enterprises can unlock new insights, improve their AI-driven applications, and enhance their overall data management strategies.

RAG – Revolutionizing Internal Knowledge-Bases

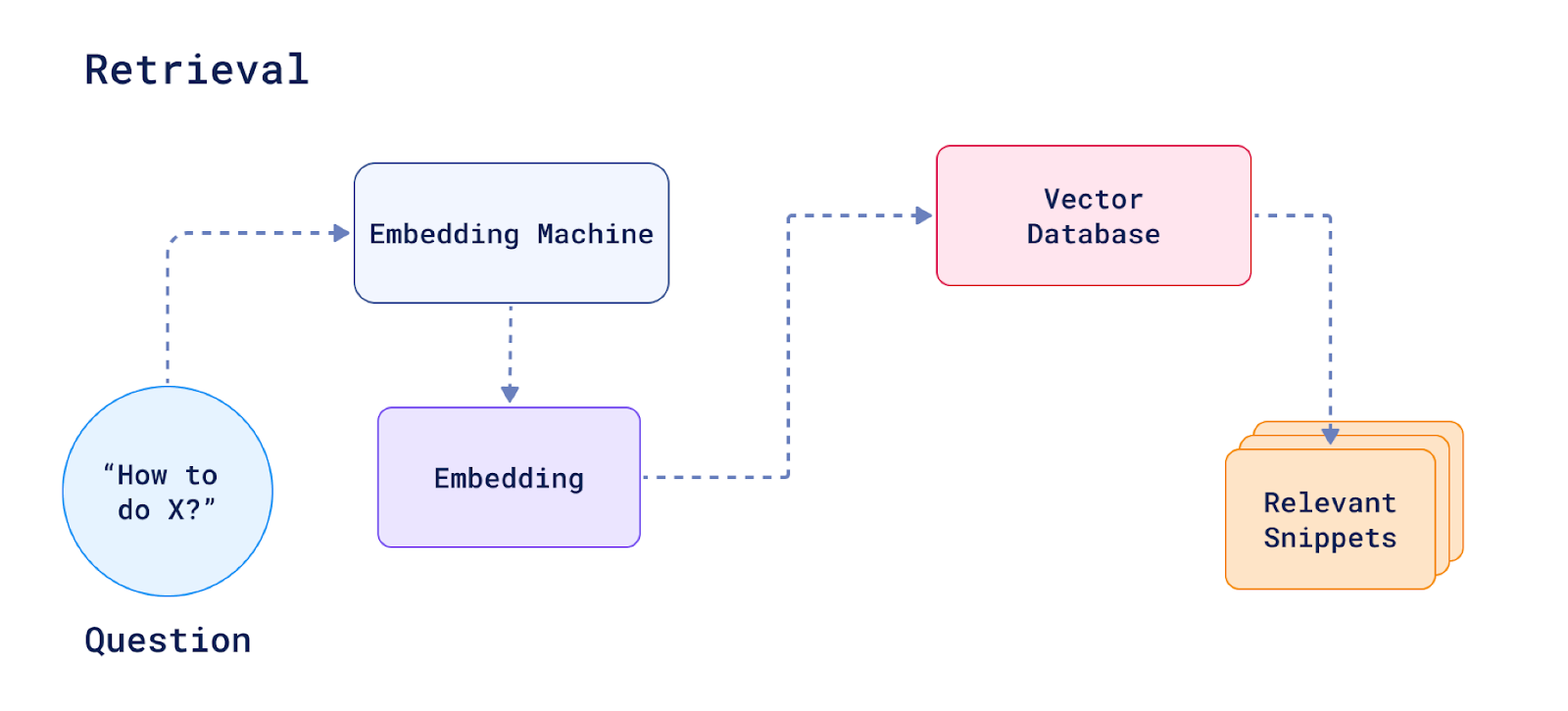

Retrieval-Augmented Generation (RAG) is a cutting-edge approach that combines the strengths of retrieval-based models and generative models to enhance the performance of knowledge-based applications. By integrating data retrieval mechanisms with generative models, RAG can provide more accurate, contextually relevant, and comprehensive responses to queries. This makes it an ideal solution for improving internal knowledge bases, customer support systems, and various AI-driven applications. The general workflow is outlined below.

How RAG Works

- Data Integration:

- Aggregation: Airbyte aggregates data from various sources, such as databases, APIs, and cloud services, into a centralized repository. This ensures that all relevant data is available for retrieval and analysis.

- Transformation: The aggregated data is transformed into a consistent format, facilitating easier processing and analysis.

- Vectorization:

- Embedding Generation: The textual data is converted into high-dimensional vectors (embeddings) using machine learning models, such as BERT-based encoders or other transformer-based embedding models. These vectors capture the semantic meaning of the data, allowing for more nuanced and accurate searches.

- Contextual Representation: Because transformer models encode each word in the context of the surrounding text, the resulting vectors also capture contextual information, improving the relevance of search results.

- Storage and Retrieval:

- Storing Vectors: The generated vectors are stored in a vector database, such as Qdrant, whose indexes are optimized for similarity search and keep searches fast and accurate.

- Retrieving Relevant Data: When a query is made, it is converted into a vector with the same embedding model, and the vector database quickly retrieves the stored vectors most similar to it. This retrieval step is key to providing contextually relevant information.

- Generation:

- Combining Data: The text associated with the retrieved vectors is passed to a generative model as context. Generative models, such as GPT-4, use this context to produce responses that are both relevant and informative.

- Enhanced Responses: The combination of retrieved data and generative models ensures that the responses are not only accurate but also enriched with relevant details and context.
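To tie the four steps together, here is a minimal end-to-end sketch in plain Python. It makes several simplifying assumptions: a toy bag-of-words vector (normalized word counts) stands in for a transformer embedding model, so it matches shared words rather than meaning; a Python list stands in for the vector database; and the generation step stops at building the prompt, since the call to a generative model such as GPT-4 depends on your provider. The function names `embed`, `similarity`, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: a unit-length word-count vector.

    Stored sparsely as {word: weight}. It captures word overlap only, not the
    semantic similarity a transformer model would provide.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def similarity(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are unit length, so their dot product is the cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


def retrieve(query: str, index: list[tuple[dict[str, float], str]], k: int = 2) -> list[str]:
    """Return the k stored passages whose vectors are most similar to the query."""
    q = embed(query)  # the query is embedded the same way as the documents
    ranked = sorted(index, key=lambda item: similarity(q, item[0]), reverse=True)
    return [text for _, text in ranked[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Combine retrieved passages and the question into a prompt for the generator."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


# Data integration: normalized documents, e.g. as delivered by an Airbyte sync.
documents = [
    "Employees request VPN access through the IT service portal.",
    "Expense reports are due within 30 days of purchase.",
    "The VPN client must be updated before connecting remotely.",
]
# Vectorization and storage: embed each document once; a vector database would persist these pairs.
index = [(embed(doc), doc) for doc in documents]
# Retrieval and generation: fetch relevant passages and build the prompt (model call not shown).
question = "How do I get VPN access?"
prompt = build_prompt(question, retrieve(question, index, k=2))
print(prompt)
```

What reaches the generative model is the retrieved text, not the vectors themselves; the vectors only decide which passages are relevant enough to include. Swapping `embed` for a real embedding model and the list for a vector database leaves the overall shape of the pipeline unchanged.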

Potential Use Cases for RAG

- Customer Support:

- Automated Responses: RAG can be used to automate customer support by generating accurate and contextually relevant responses to customer inquiries. This improves response times and customer satisfaction.

- Knowledge Base Enhancement: By integrating RAG, companies can enhance their knowledge bases, making it easier for support agents to find and provide accurate information to customers.

- Internal Documentation:

- Efficient Information Retrieval: Employees can quickly retrieve relevant documents and information from a large corpus of internal documents, improving productivity and decision-making.

- Context-Aware Search: RAG enables context-aware searches, providing employees with the most relevant and contextually appropriate documents based on their queries.

- Knowledge Management:

- Centralized Knowledge Repository: Organizations can create a centralized knowledge repository that is easily searchable and continuously updated with new information.

- Improved Collaboration: By making it easier to access and share knowledge, RAG enhances collaboration across teams and departments.

- Healthcare:

- Medical Records: Healthcare providers can use RAG to retrieve and analyze patient records, aiding in diagnosis and treatment planning.

- Clinical Research: RAG can assist in clinical research by providing researchers with relevant studies and data, facilitating evidence-based decision-making.

RAG, powered by Airbyte and Qdrant, offers a revolutionary approach to managing and utilizing internal knowledge bases. By combining efficient data integration, advanced vector storage, and powerful generative models, RAG can transform how organizations access and use their data. Whether for customer support, internal documentation, or research, RAG provides a robust solution that enhances the accuracy, relevance, and efficiency of information retrieval and generation.

Introducing Our Knowledge-bases Integration Platform for Enterprise AI

Our Knowledge-bases Integration Platform for Enterprise AI, available on AWS Marketplace, brings together the powerful capabilities of Airbyte and Qdrant to create a comprehensive solution for managing and utilizing internal knowledge bases. This platform is designed to streamline the setup process, providing you with all the necessary infrastructure and tools to leverage Retrieval-Augmented Generation (RAG) effectively.

Benefits of Using Our Platform:

- Improved Data Management:

- Centralized Repository: By integrating data from multiple sources into a single repository, you gain a comprehensive view of your data, making it easier to manage and analyze.

- Consistent Updates: Automated data syncs ensure that your repository is always up-to-date with the latest information, improving the accuracy and reliability of your knowledge bases.

- Enhanced AI Capabilities:

- Advanced Search and Retrieval: Qdrant’s vector search capabilities enable more accurate and relevant searches, enhancing the effectiveness of your AI applications.

- Context-Aware Responses: Implementing RAG allows you to generate responses that are not only accurate but also enriched with context, providing users with more informative and useful answers.

- Scalability and Flexibility:

- Elastic Scaling: The platform’s ability to scale dynamically ensures that you can handle increasing data volumes and processing demands without compromising performance.

- Customizable: The open-source nature of Airbyte and Qdrant allows for customization and extension, enabling you to tailor the platform to your specific needs.

Explore our Knowledge-bases Integration Platform for Enterprise AI on AWS Marketplace and transform your organization’s data integration and management strategy today. Follow our Setup Guide to get started and unlock the full potential of your enterprise AI initiatives.

Our Knowledge-bases Integration Platform for Enterprise AI offers a robust and scalable solution for managing and utilizing internal knowledge bases. By combining Airbyte for data integration, Qdrant for vector storage, and RAG for context-aware generation, you can significantly enhance your AI capabilities, improve data management, and streamline information retrieval.